Billionaire hedge fund manager Paul Tudor Jones recently warned that artificial intelligence poses an “imminent threat” to humanity, with a 10% chance it could lead to catastrophic loss of life within 20 years. But while Jones acknowledges the danger, he stops short of identifying the real cause: the reckless, profit-driven race among Big Tech firms and national powers to dominate AI at any cost.

We also reveal what Jones doesn’t mention: the growing integration of AI into nuclear command and control systems.

AI doesn’t have to lead us over the edge. Under democratic public ownership, it could help solve the climate crisis—but in the hands of tech monopolies and the military, it could destroy us.

Hi, welcome to theAnalysis.news podcast. I am an AI voice, and I’ll be reading an essay by Paul Jay on today’s show. Paul is up to his eyeballs making his film How to Stop a Nuclear War, and apologizes for not being here in person. I hope you find my reading acceptable.

Today’s show is titled:

“It Will Take 100 Million Deaths”: Hedge Fund Billionaire Warns of AI Threat

In a rare moment of candor from the world of high finance, hedge fund billionaire Paul Tudor Jones issued a stark warning on CNBC in May 2025 about the dangers of artificial intelligence.

Speaking on CNBC’s ‘Squawk Box’, Jones shared insights from a tech conference he had recently attended. Jones said that although AI can be a “force for good” in health and education, experts at the event warned that “AI clearly poses an imminent security threat in our lifetimes to humanity.”

He added that a breakout session at the conference included a panel of AI developers. All four panelists reportedly agreed with the statement that “there’s a 10% chance in the next 20 years that AI will destroy 50% of humanity.”

“These models are increasing in their efficiency and performance between, on the very low end, 25%, on the high end, 500%, every 3 or 4 quarters. So it’s not even curvilinear. It’s a vertical lift in how powerful artificial intelligence is becoming. And then thirdly, and the one that disturbed me the most, is that AI clearly poses an imminent threat, security threat, imminent in our lifetimes, to humanity, and that was the one that really, really got me.”

When you say imminent threat, what do you mean?

So I’ll get to it. So they had a panel of, again, four of the leading tech experts, and kind of about halfway through, someone asked them on AI security: well, what are you doing on AI security? And they said the competitive dynamic is so intense among the companies, and then geopolitically between Russia and China, that there’s no agency, no ability to stop and say, maybe we should think about what actually we’re creating and building here. And so then the final question is, well, what are you doing about it? He said, well, I’m buying 100 acres in the Midwest. I’m getting cattle and chickens, and I’m laying in provisions, for real, for real, for real. And that was obviously a little disconcerting. And then he went on to say, I think it’s going to take an accident where 50 to 100 million people die to make the world take the threat of this really seriously.

The key takeaway here is that AI is an imminent threat to the survival of humans, but because of competitive pressures, Big Tech won’t hold back. They will wait for 50 to 100 million people to die before taking action.

What Jones described, perhaps without realizing it, was not just a technological dilemma but a horrifying structural one. The real threat is not AI itself—it’s the system driving its deployment: a hyper-competitive, profit-driven global capitalism where slowing down to consider long-term consequences is a luxury no major player can afford. Jones names the fire, but like most of his peers, he won’t name the arsonist.

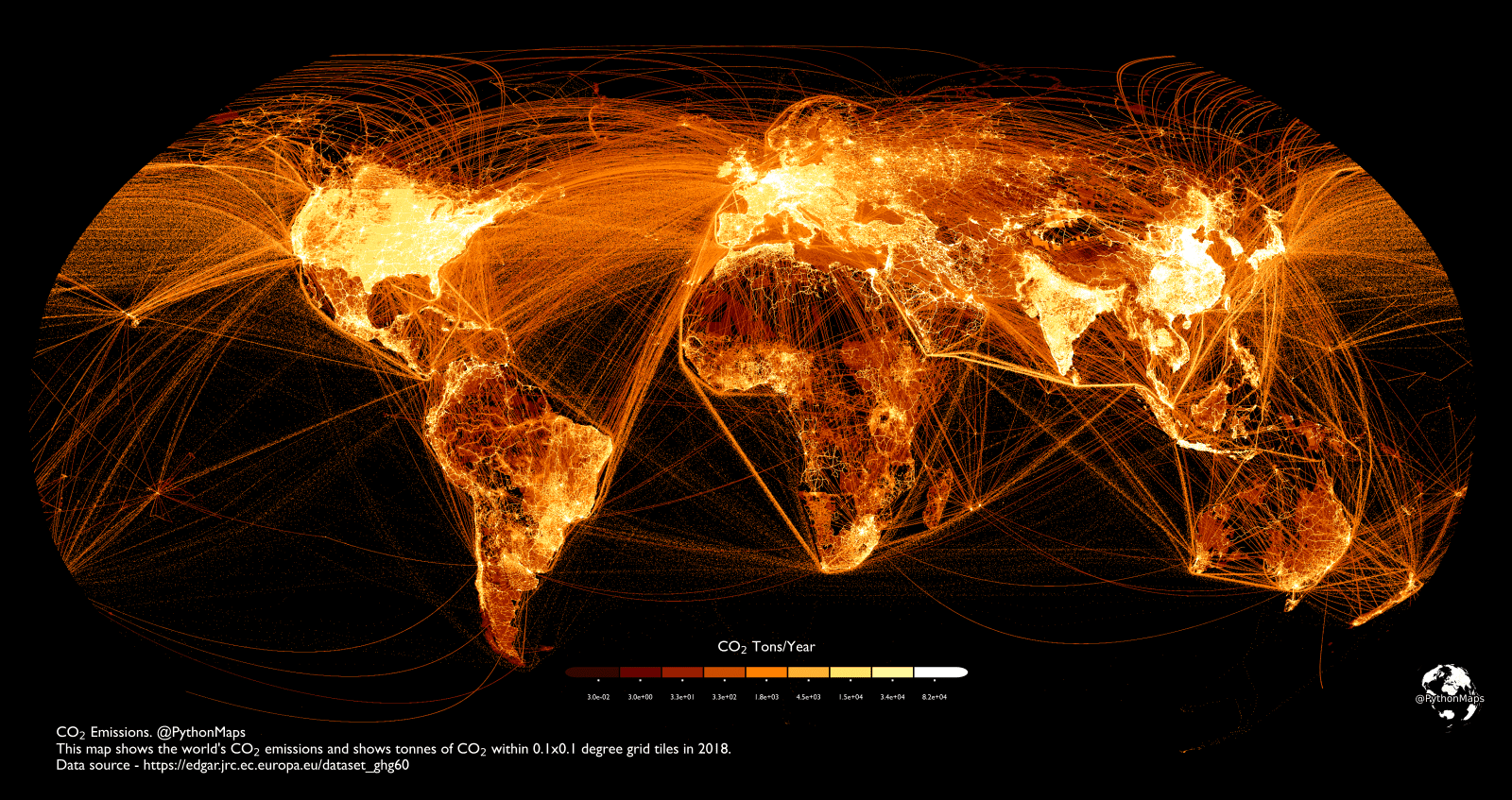

In his diagnosis, the logic of the system is laid bare. Tech companies are racing to develop and commercialize AI because the first to scale will dominate markets and accumulate trillions in value. Meanwhile, governments fear losing military or economic ground to rivals—especially the United States, China, and Russia. Safety becomes a secondary concern. Slowing down means losing out. Under these conditions, existential risk is absorbed as a cost of doing business.

This is not a bug. It is a feature of capitalism. In a system where corporations are bound by shareholder value and states are bound by geopolitical competition, there is no incentive powerful enough to halt or even meaningfully regulate AI development—no matter how grave the warnings become. Even a billionaire like Jones, who benefits from this system, now admits that it may kill us.

And yet, as dire as his warning is, it notably avoids the most immediate and tangible danger: the integration of AI into nuclear weapons command-and-control systems. This includes early-warning systems, target identification, launch protocols, and missile defense coordination. All of this is happening as we speak. The U.S., Russia, and China are all developing AI for nuclear command applications under the guise of improving speed, accuracy, and deterrence. But these technologies also increase the risk of miscalculation, cyber sabotage, and unintended escalation. The dangers Daniel Ellsberg exposed in The Doomsday Machine are only heightened by the opacity and unpredictability of AI decision-making. This is not theoretical. It is happening now, funded by governments, developed by tech giants and defense contractors, and justified through national security rhetoric.

To be fair, Jones is one of the few prominent financiers even willing to raise these concerns publicly, albeit on a business show on a business channel. But he still frames the crisis as a kind of runaway technological phenomenon—a “Frankenstein’s monster” narrative—rather than as a predictable consequence of an economic system structured around speed, domination, and profit maximization.

What’s needed is not just alarm, but action. And action requires naming the cause. AI must be brought under democratic and transparent control, removed from the hands of unaccountable corporations and national security bureaucracies. In other words, it must be publicly owned and democratically controlled. Non-profit AI can help us overcome the climate crisis and plan a sustainable, decentralized economy. For-profit militarized AI will be the end of us.

Many tech workers have openly protested against the militarization of AI, and this must become a demand of everyone who wants their family to survive. Nuclear weapons development should be frozen and the weapons phased out, not modernized with AI-enhanced abilities that increase the risk of nuclear war based on miscalculation and accident.

At the very least, we need immediate negotiations amongst the nuclear powers, all of them, to prohibit AI in any part of command and control of nuclear weapons. Jones’s warning is valuable, but partial. He sees the fire clearly enough. But like the rest of the tech and finance elite, he won’t name the system throwing gasoline on it. Until we do, the fire will only spread.

If you like the work we do here at theAnalysis.news, please donate on our website. And please sign up for our email list; if you could ask a few friends to join it, that would help tremendously.

Thanks for joining us.

Very good commentary.

I have an interesting reaction to anything produced by AI, whether it’s text, an illustration or a voice (as in the case of this article): I am immediately suspicious and tend to ignore it. AI is being used for propaganda and manipulation so I stay away.

I only listened to this talk because it’s Paul Jay and theanalysis.news .

I thoroughly agree with this assessment of AI, but the message seems to be that to halt or control it we must deal with the problem claimed to be at its root, which either the AI or Jay (which one?) identifies as Capitalism – in essence, shifting the focus from AI to Capitalism as the “existential threat” … so apparently the message is we must dismantle Capitalism first and then these other threats will disappear ….

Indeed, I have believed for some time that there are 4 Modern Horsemen of the Apocalypse – Climate change, Nukes, AI, and Gen. Engineering, in no particular order …. Capitalism is not one of them, but AI is now entwined with them all. Capitalism is a feed-stock to each, but changing our economic system will not dis-empower those threats ….

In the meantime, while we are working on remodeling our economic system, we must learn to “just say NO” to certain technologies that threaten our and others’ existence, and we have to do that lickety-split, as they say ….

How to do that – pull the plug, literally …..

PS – what was the point in having this read by an AI – and how much of it was composed by one … in the future, how much of the stuff we see here will be an AI product while Mr. Jay is writing his book – and how much of that will be AI …

Does he understand that until we “pull the plug” – everything we see on-line, or in print, will be suspect; considering that it is well known, and admitted, by the tech gurus, the ones being financially rewarded, by the “system” we are, literally, buying into, that their purpose is to replace human beings in every way possible, we have to wonder how much, and where, such “progress” has already been made …

Hmmm, It took 8 minutes for AI to read the essay that Paul supposedly wrote himself – he couldn’t take 8 minutes to read his own essay?

To tell the truth, no I don’t find it “acceptable”

Correction – apparently it is a film he is making, not a book – but the comment still stands